Wan 2.6 vs Sora 2: Which AI Video Model Fits You?

The AI video field has advanced fast in 2025, shifting from simple clips to distinct creative philosophies. Sora 2 is OpenAI's cinematic "world simulator," known for physics-driven storytelling and artistic interpretation. Wan 2.6 is Alibaba's precision-focused "production powerhouse," built for speed, accuracy, and commercial reliability. Whether you need emotional cinematic depth or consistent, literal results, understanding their differences is key to choosing the right tool.

Wan 2.6 vs Sora 2: Core Differences

Before diving into the technical details, it is helpful to see how these two models fundamentally differ in philosophy and target user.

| Feature | Wan 2.6 (Alibaba Tongyi Lab) | Sora 2 (OpenAI) |
|---|---|---|
| Core Philosophy | Practical Production: Efficiency, literal accuracy, and structured workflows. | World Simulator: Cinematic interpretation, deep physics, and atmospheric storytelling. |
| Target Audience | Marketers, Influencers, Educators, Developers. | Filmmakers, Storytellers, Social Media "Pro" users. |
| Key Strength | Literal Accuracy & Speed: Delivers exactly what is prompted with high consistency. | Cinematic Flair: Interprets prompts with dramatic lighting, mood, and complex camera moves. |
| Video Length | Up to 15 seconds (native). | 10s - 60s (native with multi-shot). |
| Audio | Phoneme-level Lip-Sync: Superior for "talking head" and dialogue videos. | Cinematic Soundscapes: Excellent ambient audio and sound effects. |
| Availability | Open-weight heritage (e.g., Wan 2.1); Wan 2.6 via hosted platforms. | Invite-only app (iOS); sora.com for Pro users. |

What Is Alibaba Wan 2.6?

Alibaba Wan is a free, high-performance AI video generation family that first stood out with the Wan 2.1 model. It turns text and images into watermark-free videos, supports bilingual (Chinese and English) on-screen text, and runs efficiently on consumer hardware such as the RTX 4090.

While Wan 2.1 established top-tier benchmarks for quality and accessibility, the series has now evolved into its latest iteration, Wan 2.6, which elevates the platform from a generation tool to a professional production powerhouse focused on delivering granular control and specific, high-fidelity outcomes for serious creators.

1. Video Generation: Literal, Efficient, and Narrative-Driven

✔️ Literal Accuracy: Wan 2.6 excels at following instructions to the letter. If you prompt for "a chef slicing vegetables in a modern kitchen," it delivers exactly that - clean composition, balanced lighting, and zero creative drift. This predictability makes it the ideal choice for commercial projects where adherence to a brief is non-negotiable.

✔️ Extended Narrative & Speed: Supporting up to 15 seconds of generation, the model introduces "multi-shot narrative" capabilities. It optimizes pacing to eliminate the uncanny "frozen stare" of single-shot AI videos, all while rendering significantly faster than competitors like Sora 2, making high-volume content cycles (ads, social media) viable.

2. The "Killer App": Advanced Reference Character & Identity

✔️ True Video-to-Video Reference: Going far beyond simple AI face-swaps, Wan 2.6 allows you to input a reference video (whether a real person, a robot, or a cartoon like Crayon Shin-chan). The model extracts both appearance and voice features to generate entirely new content.

✔️ Unrivaled Identity Retention: It maintains rock-solid character consistency across different clips - even during complex head turns or expressive acting. This is a game-changer for influencers, brand mascots, and narrative series.

✔️ Complex Interaction: You aren't limited to the original footage's context. You can command your reference character to perform completely new actions (e.g., "stir-frying bricks") or interact with other generated entities (e.g., a human and a bear sharing a shot) with seamless integration.

3. Audio-Visual Breakthroughs: Sync and Song

✔️ Phoneme-Level Lip-Sync: Wan 2.6 offers a distinct advantage in "talking head" videos. It achieves high-fidelity lip-sync where mouth shapes match actual phonemes, capturing micro-gestures like eyebrow lifts. If your reference video lacks audio, the model can even generate a matching voice automatically.

✔️ Full-Structure Music Generation: The model can generate complete 3-4 minute music tracks with a proper structure (Intro, Verse, Chorus, Outro). Users have granular control over genre, mood, instruments, and language (Chinese, English, Japanese, Korean), though specific voice cloning for singing is currently a limitation.

4. Design & Graphic Capabilities

✔️ Text-Image Mixing: Similar to tools like Nano Banana, Wan 2.6 supports intelligent text-image layouts, allowing for the generation of posters or illustrations where text is logically embedded into the visual design.

✔️ Multi-Subject & Stylization: It boasts significantly improved consistency for scenes with multiple distinct characters (ideal for comics or storyboards) and an enhanced ability to replicate specific cultural IPs (e.g., Sanxingdui artifacts) or art styles with precision.

What Is Sora 2?

Sora is OpenAI's groundbreaking text-to-video AI model, designed to transform written prompts into highly realistic and imaginative video clips, serving as a versatile tool for filmmakers and creators to visualize complex concepts, remix existing footage, and explore new frontiers in entertainment and simulation.

Built on a diffusion architecture that understands physical interactions, Sora has now advanced to its state-of-the-art iteration, Sora 2, which OpenAI describes as the "GPT-3.5 moment for video" - introducing a dedicated social paradigm with "AI Cameos" and significantly deepening its capabilities as a "World Simulator" that accurately models physics and real-world dynamics.

1. Cinematic Interpretation & "World Simulation"

✔️ The Filmmaker's Eye: Unlike models that literally transcribe text to video, Sora 2 interprets prompts with artistic intent. A simple instruction can yield a scene with dramatic lighting, dynamic camera sweeps, and sophisticated emotional tonal shifts.

✔️ True Physics Engine: It attempts to obey the laws of physics rather than morphing pixels. It simulates gravity, buoyancy, and collisions - if a basketball player misses a shot, the ball rebounds realistically off the rim instead of teleporting into the net.

✔️ Modeling Failure: Crucially, the model understands that actions can fail. This ability to simulate mistakes adds a layer of "lived-in" realism that is essential for a true world simulator.

2. The Social Paradigm: Characters & Remixing

✔️ "Characters" Feature: Sora 2 introduces a consumer-facing "killer app" feature: users can "upload themselves" via a one-time video/audio recording to create a digital twin. This allows you to drop yourself directly into any generated scene.

✔️ Remix Culture: The dedicated iOS app fosters a TikTok-style ecosystem where users are encouraged to remix and build upon each other's generations, making AI video a social experience.

✔️ Safety First: The platform is heavily moderated to prevent deepfakes, ensuring strict control over user likeness and safety.

3. Visual Fidelity & Simulation Accuracy

✔️ Atmosphere & Detail: Sora 2 leads the industry in photorealistic detail and atmospheric depth. Cloth flows naturally, and environmental effects like dust, fog, and wind feel authentic. It excels at creating complex interactions (e.g., gymnastics, ice skating) where objects possess palpable weight and momentum, aiming for Simulation Accuracy over simple visual appeal.

4. Workflow & Positioning

✔️ Prompt-Heavy & Expressive: While Wan 2.6 thrives on specific inputs, Sora 2 is designed for "fun" and exploration via text prompting. It rewards creative phrasing with cinematic brilliance.

✔️ Premium Positioning: Positioned as a high-end tool, Sora 2 is accessed primarily through a paid ChatGPT subscription (the Pro tier costs $200/month) or the invite-only app, reflecting the higher compute costs of its advanced world simulation.

Wan 2.6 vs Sora 2: Head-to-Head Comparison

Choosing between Wan 2.6 and Sora 2 is about purpose, not just quality.

🌟 Wan 2.6 is a precision production tool - fast, literal, and highly consistent for creators who need exact results.

🌟 Sora 2 is a cinematic world simulator, focused on rich physics, artistic interpretation, and storytelling freedom.

The table below highlights how each model’s features reflect these different philosophies.

| Feature | Wan 2.6 (Alibaba) | Sora 2 (OpenAI) |
|---|---|---|
| Core Philosophy | Production Powerhouse (Literal & Efficient) | World Simulator (Cinematic & Interpretive) |
| Best For | Commercials, Education, Influencers, Ads | Filmmaking, Storytelling, Social Entertainment |
| Prompt Adherence | Literal Accuracy: Follows instructions exactly with zero creative drift. | Cinematic Interpretation: Adds dramatic lighting, mood, and "director's flair." |
| Identity Control | Video-to-Video Reference: Extracts exact look/voice from a reference video. Unrivaled consistency. | "Characters" (Cameos): One-time scan to insert a user's digital twin into scenes. |
| Physics & Motion | Visual Stability: Crisp, artifact-free motion; excellent for specific actions. | True Physics Engine: Simulates gravity, collisions, and "failure" (e.g., missed shots). |
| Audio | Phoneme-Level Lip-Sync: Perfect for talking heads. Generates full songs (Verse/Chorus). | Cinematic Soundscapes: Realistic ambient sounds, SFX, and atmospheric audio. |
| Speed & Hardware | Fast & Efficient: Quick rendering; the open-weight Wan 2.1 runs on consumer GPUs (e.g., RTX 4090). Viable for high volume. | Compute Heavy: Slower generation due to complex simulation. Cloud-based/Premium. |
| Text/Design | Text-Image Mixing: Embeds logical text into images/posters. | N/A: Primarily focused on video/world simulation. |
| Access | Free / Open-weight heritage | Premium (ChatGPT Pro / Invite-only iOS App) |

Wan 2.6 vs Sora 2: Which One Should You Choose?

Wan 2.6 challenges Sora 2 precisely because it targets a different set of priorities.

👉️ Choose Wan 2.6 if: You are a marketer, educator, or influencer who needs a reliable, fast workhorse. You value speed, identity consistency, and perfect lip-sync for spoken content. You want a tool that "listens" to your instructions literally.

👉️ Choose Sora 2 if: You are a filmmaker or storyteller aiming for emotional impact and cinematic realism. You want to create immersive worlds, sophisticated narratives, or enjoy the social experience of "remixing" content with friends.

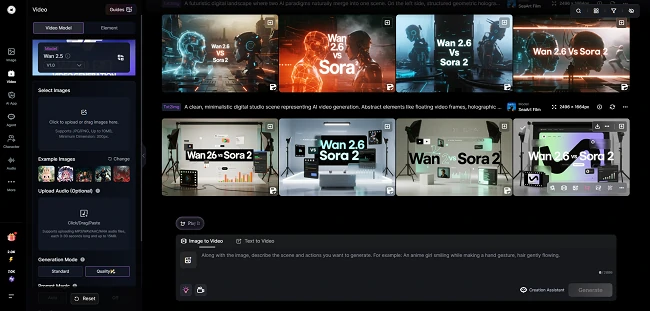

How to Use Wan 2.6 for Free

The era of "good enough" is over. Whether you need the structured precision of Wan 2.6 or the creative magic of Sora 2, SeaArt AI offers a specialized tool for every creator.

As a world-class AIGC platform, SeaArt AI offers users a complete one-stop creative ecosystem. From image and video generation to audio creation and even AI character chats, it brings together top global models, including Wan 2.6, Gemini 3, Sora 2, and Nano Banana Pro. Here, AI creation and immersive conversational experiences are always within reach. Follow the simple steps below to access Wan 2.6 in a few clicks:

Step 1. Enter the AI video generation page on SeaArt AI and choose the model you need.

Step 2. Enter your prompt, upload your image, and click "Generate" to start. If you're not satisfied with the result, refine the prompt or adjust the uploaded image, then generate again until the output matches what you want.

Summary

Choosing between Wan 2.6 and Sora 2 isn't about which is "better," but which fits your goal. Wan 2.6 excels in speed, perfect lip-sync, and consistent character control for commercial or educational work. Sora 2 shines in cinematic storytelling, physics-rich scenes, and creative social remixing.

The best part: you can try both today on SeaArt AI. As an all-in-one AIGC platform, SeaArt AI unifies top global models - including Wan 2.6, Sora 2, and Gemini 3 - so you can effortlessly test precise production workflows or explore cinematic world simulation in one place.